AI Insights: Key Global Developments in June 2025

- Staff Correspondent

- Jun 19


June marked a pivotal moment in global AI governance, as regulators across continents ramped up their efforts to set the guardrails for responsible AI use. The EU’s AI Office launched a public consultation to clarify how high-risk AI systems will be classified under the EU AI Act—one of the most anticipated regulatory moves this year. Meanwhile, Japan passed its first AI-specific legislation, favoring a principles-based, voluntary approach over strict compliance.

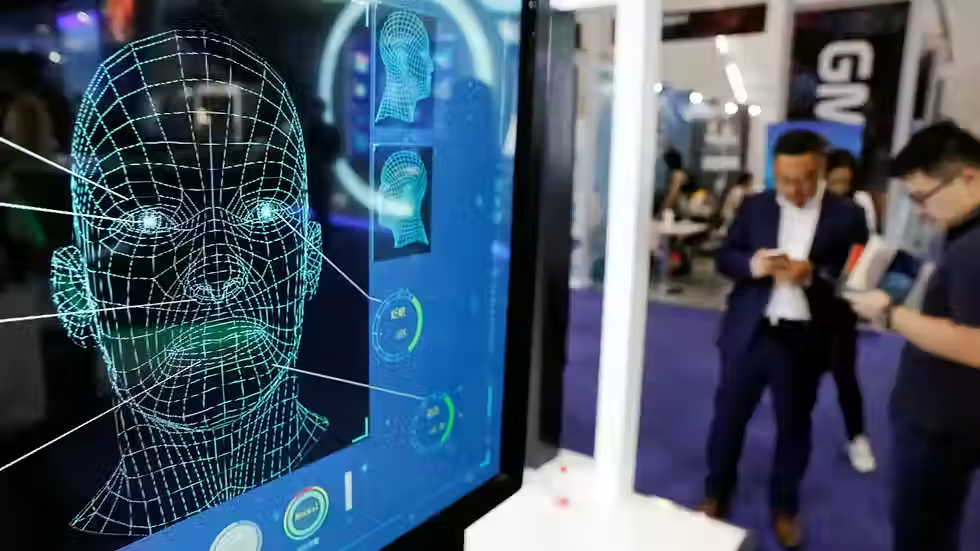

Elsewhere, the UK’s financial regulator explored the role of large language models in simplifying consumer financial services, even proposing a sandbox for live testing. The European Commission also took steps to align with the Council of Europe’s international AI treaty, while China brought into force sweeping rules around facial recognition, focusing on privacy and biometric data safeguards.

Catch up on all these critical developments below.

AI Office launches consultation on High-Risk AI under the EU AI Act

In June 2025, the European Commission's AI Office launched a public consultation to develop guidelines on how the EU AI Act classifies high-risk AI systems.

The consultation aims to clarify how Articles 6(1) and 6(2) of the Act apply to AI systems incorporated into EU-regulated products (as described in Annex I) and to those that pose significant risks to fundamental rights, health, or safety. To help shape the upcoming guidelines, a wide range of stakeholders, including AI developers, consumers, and regulatory agencies, have been asked to contribute their real-world experience, practical ideas, and feedback. The guidelines will detail the classification criteria, the relevant regulatory requirements, and the roles of actors throughout the AI value chain.

The six-week consultation closes on July 18, 2025. It will inform non-binding guidelines that the Commission expects to publish in February 2026.

Japan enacts its First AI-related Law to support Responsible AI Research & Innovation

Japan enacted its first AI-specific legislation, the Act on the Promotion of Research, Development and Utilisation of Artificial Intelligence-Related Technologies, a major step in promoting responsible AI innovation while addressing new threats such as AI-generated misinformation.

The law takes a principles-based, non-binding approach, in contrast to the EU's risk-based regulatory system. Rather than imposing fines or legal duties, it seeks to guide the growth of AI through voluntary adherence. It sets national objectives and specifies the responsibilities of the government, the private sector, and civil society, encouraging cooperation with government-issued guidance.

Key highlights:

Establishment of a Prime Minister-chaired AI Strategy Headquarters to coordinate national policy

Publication of a national AI Basic Plan to promote research and development

Government support for developers through access to shared resources, including testing tools, datasets, and computing power

Encouragement of voluntary cooperation among interested parties through government-led programs

Focus on promoting global collaboration in AI research and implementation

This law demonstrates Japan's dedication to striking a balance between technological development, public confidence, and global alignment.

Read more here (Japanese only).

UN Report Calls for Urgent Global Coordination on AGI Preparedness

On June 3, 2025, the UN Council of Presidents of the General Assembly released a report urging the international community to act swiftly to prepare for the rise of Artificial General Intelligence (AGI). The report highlights both the enormous promise and the serious risks of the technology as AGI draws closer, possibly within this decade.

The report, prepared by a panel of leading experts, highlights six key areas of concern:

The risk of humans losing control over AGI systems

The development of new and extremely lethal weapons

The potential for cyberattacks employing AGI to target vital infrastructure

Economic collapse if a small number of powerful firms continue to dominate the market for AGI capabilities

Existential threats posed by fully autonomous AGI systems

Growing global inequality if AGI fails to address shared societal challenges such as healthcare, climate change, and poverty reduction

The panel recommends a number of bold measures to address these issues, such as:

Holding a special UN General Assembly session on artificial general intelligence

Establishing a Global AGI Observatory to track advancements and new dangers

Establishing a certification procedure to guarantee that AGI systems adhere to safety and trust requirements

Putting forward a UN-wide framework treaty to create standards for global governance

Examining the establishment of a new UN organization dedicated solely to AGI oversight

The report has been formally sent to the General Assembly President. As the international body considers its next course of action, preliminary consultations are already under way, and more briefings are anticipated shortly.

Read more here.

UK FCA releases insights on AI use in Consumer Financial Services

The UK Financial Conduct Authority (FCA) has issued a research note exploring the application of large language models (LLMs), such as GPT-3.5 and GPT-4, within consumer-facing financial services.

As part of this research, the FCA carried out two pilot studies:

The first evaluated the ability of GPT models to translate complex financial terminology into plain English.

The second compared savings advice provided by a chatbot with that from a standard website Q&A.

The results suggest that LLMs can enhance the user experience and simplify financial information. However, their success depends directly on how well these tools are integrated into the customer journey. The FCA emphasized the importance of validating AI-generated outputs using both automated tools and human review.

Alongside the study, the FCA released an engagement paper proposing a framework for AI live testing. The proposal, modeled on the existing regulatory sandbox, would allow companies to trial LLM-powered tools under supervision. Stakeholder input is currently being sought, and further updates on the pilot program are expected later in the year.

European Commission moves to align EU with International AI treaty

The European Commission has submitted a formal proposal seeking authorisation for the EU to accede to the Council of Europe’s Framework Convention on Artificial Intelligence, Human Rights, Democracy and the Rule of Law.

The Convention, which was adopted in 2024, is the first legally binding international pact on artificial intelligence. By including clauses pertaining to accountability, transparency, and human oversight, it seeks to guarantee that AI systems respect democratic values, fundamental rights, and the rule of law.

The proposal is an important step toward the EU joining the Convention. Once approved by the Council of the EU, it would bring the bloc into line with the 46 current members and observers that have already committed to the Convention's principles. To clarify how the Convention will work alongside the EU's existing AI Act, the Commission has also proposed a Council declaration.

Council discussions on the proposal are expected to begin in the coming months.

Link to full proposal HERE.

China tightens oversight on facial recognition practices

China’s new regulatory framework governing the use of facial recognition technology has officially come into force. The Security Management Measures for the Application of Facial Recognition Technology, released by the Ministry of Public Security and the Cyberspace Administration of China, are intended to promote more responsible and transparent AI deployment while addressing growing concerns about surveillance, data misuse, and privacy violations.

Key requirements:

Transparency: Before collecting any facial biometric information, businesses must notify people clearly and concisely.

Purpose limitation: Facial recognition technology may be used only where clearly necessary and for specific, justified purposes.

Data control: Data must be stored locally and retained for the minimum time necessary.

Large-scale processing: Companies that handle data belonging to more than 100,000 people are required to register with provincial authorities and report their data handling procedures.

Child protection: Processing data relating to minors under the age of 14 requires parental consent and additional protections.

No coercion: Using facial recognition technology in a deceptive or coercive manner is expressly prohibited.

Entities handling the facial data of more than 100,000 people must complete official registration within 30 working days of surpassing the threshold. Those that had already reached the threshold before the Measures came into force must register by mid-July 2025. Registrations must be made online and require a technology application record form and a personal information protection impact assessment.
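For compliance teams tracking this threshold, the timing rule above can be sketched as a small calculation. This is an illustrative sketch only, not legal guidance: it assumes working days are Monday to Friday and ignores Chinese public holidays and make-up working days, and the function and constant names are hypothetical.

```python
from datetime import date, timedelta
from typing import Optional

# Figures taken from the summary above; names are hypothetical.
REGISTRATION_THRESHOLD = 100_000  # people whose facial data is handled
WORKING_DAYS_TO_REGISTER = 30     # working days allowed after surpassing it


def add_working_days(start: date, working_days: int) -> date:
    """Count forward `working_days` Monday-Friday days after `start`.

    Assumption: weekends are the only non-working days; public holidays
    are not modelled.
    """
    current = start
    remaining = working_days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # 0-4 are Monday to Friday
            remaining -= 1
    return current


def registration_deadline(people_count: int, crossed_on: date) -> Optional[date]:
    """Return an estimated filing deadline, or None at or below the threshold."""
    if people_count <= REGISTRATION_THRESHOLD:
        return None
    return add_working_days(crossed_on, WORKING_DAYS_TO_REGISTER)
```

For example, `registration_deadline(120_000, date(2025, 6, 2))` gives an estimated deadline under these simplified assumptions, while a count of exactly 100,000 returns `None` because the rule applies to more than 100,000 people. An actual deadline should be confirmed against the official working-day calendar.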

The rules highlight China's move toward more accountable use of biometric data and tighter supervision of AI technologies.

Stay informed with our regulatory updates and join us next month for the latest developments in risk management and compliance!

For any feedback or requests for coverage in future issues (e.g. additional countries or topics), please contact us at info@riskinfo.ai. We hope you found this newsletter insightful.

Best regards,

The RiskInfo.ai Team
